Continuing from our first impressions post about logs, we’ve been diving deeper into log files on a more regular basis here at periscopeUP. We’ve found a number of uses for log files beyond diagnosing indexation and crawling issues. Recently we looked into using log files to control legitimate bots and block spam bots.

When a bot hits your server, it uses server resources to scan and crawl the website. This is normal, but what happens if a bot decides to crawl again and again within 5 minutes? What happens when you have a bad bot or a rogue crawler?

Crawl-Rate – Robots.txt

Ever wonder why your server is slow to respond, or why it loads fast one day and slow the next? It could be a bad bot. Loading your log files into a tool like Screaming Frog Log File Analyser lets you look at the number of bot hits in a single day or over a span of time.

Nearly every website log file we’ve reviewed shows bingbot crawling around 2-3 times more frequently than any other bot. This might just be how Bing crawls, but it can overwhelm a large website with many active users. Bing isn’t trying to be malicious or cause problems; it’s just how Bing works.

A quick remedy for this particular bot is to set a crawl-delay directive in your robots.txt file. For example, a Crawl-delay: 10 line under User-agent: bingbot asks Bingbot to slow down (the value is illustrative, and how the number is interpreted varies by search engine). This directive can also be used for Yahoo! and Yandex; however, Google’s bots ignore it. Google can be controlled in Google Search Console. The only other major bot to mention is Baidu. It also ignores the crawl-delay directive and must be managed in its webmaster tools.

Spam/Rogue Crawler – .htaccess (server settings)

Now that we’ve reviewed the big name bots, let’s look at spam and rogue bots/crawlers. These can be much harder to block or eliminate. Once you’ve cleaned up the good bots, it’s time to investigate spam or junk hitting your website.

First, determine which server hits/crawls are spam or junk. For this you need a list of IP addresses and any bot names associated with each IP hitting your website. Next, check those against a database of spammers. We use the CleanTalk Web Spam Database for our checks, but there are several tools and checkers online.

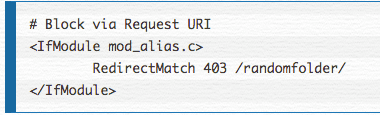

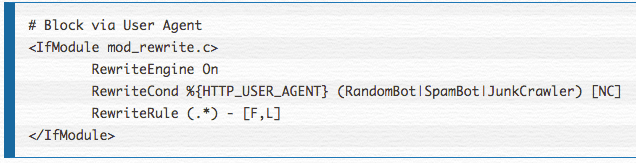

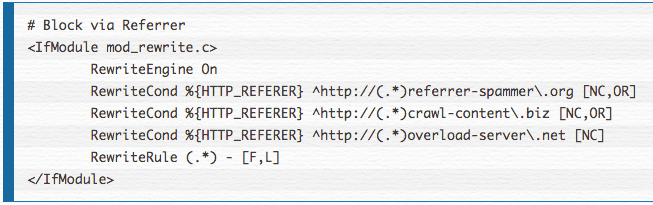

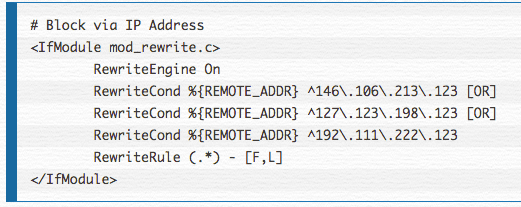

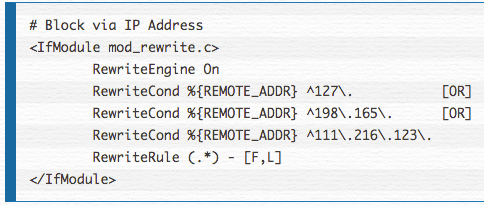

Once you have narrowed down the spam, it’s time to block it. This can be difficult and should only be done by someone familiar with .htaccess files and server settings, because a mistake in these files can take the whole site offline. Bots can be blocked via request URI, user agent, referrer, or IP address. Depending on what your log file shows, you can determine the best method to use.

Here are some examples:

Request URI
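
A minimal sketch of blocking by request URI, assuming Apache with mod_rewrite enabled and .htaccess overrides allowed. The path /spam-path/ is a hypothetical placeholder; substitute the URI pattern that actually shows up in your logs.

```apache
# Assumption: mod_rewrite is available; the block is skipped otherwise.
<IfModule mod_rewrite.c>
    RewriteEngine On
    # Match any request whose path contains the placeholder /spam-path/
    # (NC = case-insensitive).
    RewriteCond %{REQUEST_URI} /spam-path/ [NC]
    # Leave the URL unchanged (-) and answer 403 Forbidden (F).
    RewriteRule ^ - [F]
</IfModule>
```

This approach suits spam that keeps requesting the same nonexistent or abused URL from many different IPs.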

User Agent
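
A minimal sketch of blocking by user agent, again assuming mod_rewrite is enabled. BadBot and EvilScraper are hypothetical names used for illustration; use the user-agent strings recorded in your own log files.

```apache
<IfModule mod_rewrite.c>
    RewriteEngine On
    # Match a User-Agent header containing either placeholder name
    # (NC = case-insensitive).
    RewriteCond %{HTTP_USER_AGENT} (BadBot|EvilScraper) [NC]
    # Answer 403 Forbidden for matching requests.
    RewriteRule ^ - [F]
</IfModule>
```

Keep in mind that user-agent strings are easy to fake, so this mostly stops bots that identify themselves honestly.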

Referrer
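
A minimal sketch of blocking by referrer, assuming mod_rewrite is enabled. The domain spam-referrer.example is a hypothetical placeholder. Note that the HTTP header, and therefore the Apache variable, is spelled REFERER with a single R.

```apache
<IfModule mod_rewrite.c>
    RewriteEngine On
    # Match requests whose Referer header contains the placeholder domain.
    # The dot is escaped so it matches a literal "." only.
    RewriteCond %{HTTP_REFERER} spam-referrer\.example [NC]
    # Answer 403 Forbidden for matching requests.
    RewriteRule ^ - [F]
</IfModule>
```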

IP Address (More than one IP)
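
A minimal sketch of blocking several individual IPs, assuming Apache 2.4 (which uses the Require directive; Apache 2.2 used the older Order/Deny syntax instead). The addresses below come from ranges reserved for documentation and are placeholders only.

```apache
# Assumption: Apache 2.4 with authorization overrides allowed in .htaccess.
<RequireAll>
    # Allow everyone by default...
    Require all granted
    # ...except these placeholder addresses, one per line.
    Require not ip 192.0.2.10
    Require not ip 198.51.100.25
</RequireAll>
```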

IP Address (Range of IPs)
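
A minimal sketch of blocking a whole range, under the same Apache 2.4 assumption. The range is written in CIDR notation; 203.0.113.0/24 is a documentation-only placeholder covering 203.0.113.0 through 203.0.113.255.

```apache
# Assumption: Apache 2.4 with authorization overrides allowed in .htaccess.
<RequireAll>
    # Allow everyone by default...
    Require all granted
    # ...except every address in the placeholder range.
    Require not ip 203.0.113.0/24
</RequireAll>
```

Block ranges with care: a wide range can also shut out legitimate visitors who share it with the spammer.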

Any one of these, or a combination, helps block spam. If you are not using Apache (.htaccess), work with your hosting provider to determine how to configure these settings in your specific environment. Alternatively, a CDN and/or firewall in front of your website may be the best solution. Tools such as SiteLock and Sucuri keep up-to-date repositories of spam bots and IPs and block them before they ever reach your website. A firewall can also be combined with .htaccess rules, although those rules may not be necessary when you use a firewall or security service.

Once these settings or services have been configured, you should see server performance return to normal. You may even see a SERP gain thanks to having a technically sound website. Here at periscopeUP we specialize in complex spam and bot issues. If this is something you need help with, please reach out to us and we’ll work with you to solve your problems.