Earlier this year, periscopeUP’s SEO technical team started looking into server log files to help our clients with their technical SEO. The biggest issue we ran into was getting permission to access these files. The words “log file” tend to scare both business and technical people, mainly because these files are stored on the server and report on everything that happens to your server and website.

In most cases, this information is not sensitive. Once our clients gave us permission to access their log files, we realized they contained a lot of useful SEO data.

What Is A Log File?

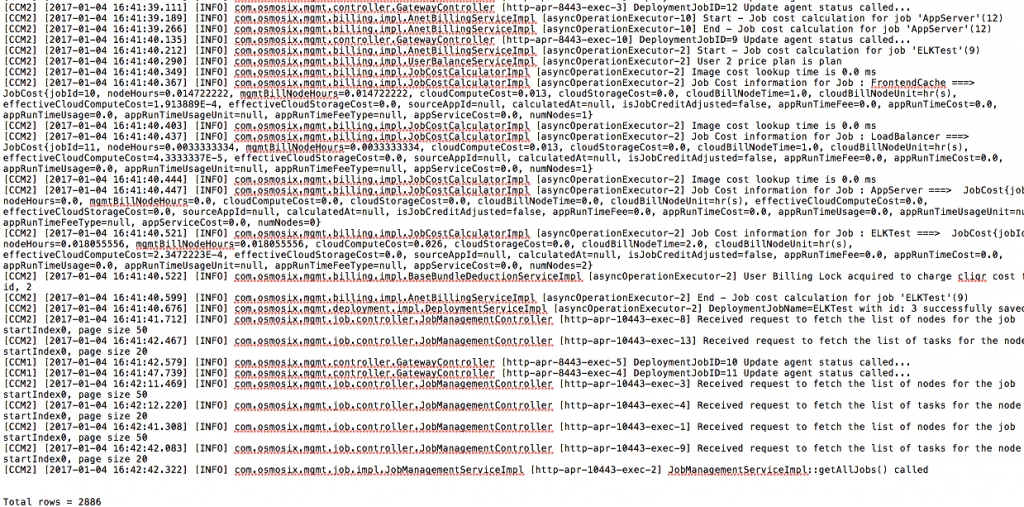

Let me start by explaining and showing you what a log file looks like without any tools, in just a text editor. For SEO purposes, we really only need the Apache access logs. These files include timestamps, request types, URLs, device info (the user agent), HTTP response codes, and more, depending on how much detail your server is configured to record. For SEO, the fields we need from these files are bot hits, URLs, timestamps, and HTTP status codes.
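To make those fields concrete, here is a minimal Python sketch that pulls them out of a single log line. It assumes your server writes Apache’s common “combined” log format; if your LogFormat is customized, the regular expression will need adjusting. The sample line is made up for illustration. Also note that a user agent containing “Googlebot” can be spoofed, so a user-agent match alone doesn’t prove a hit came from Google.

```python
import re
from datetime import datetime

# Apache "combined" LogFormat:
# %h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-agent}i"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) \S+" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def parse_line(line):
    """Return the SEO-relevant fields of one log line, or None if it doesn't match."""
    m = COMBINED.match(line)
    if m is None:
        return None
    return {
        "ip": m["ip"],
        # e.g. 10/Oct/2023:13:55:36 +0000
        "time": datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z"),
        "method": m["method"],
        "url": m["url"],
        "status": int(m["status"]),
        "agent": m["agent"],
    }

# Made-up sample line for illustration.
SAMPLE = (
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /blog/seo-tips HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
```

Running `parse_line(SAMPLE)` returns a dict with the URL `/blog/seo-tips`, status `200`, and the Googlebot user agent; lines that don’t fit the format return `None` so they can be skipped or logged separately.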

As you can see from the screenshot, it’s not easy to find the data we’re looking for, and the larger the site, the bigger these files get. At this point, we knew we needed a tool to decipher these files.

Our SEO Log File Tool Recommendations

There are a number of tools for reviewing log files, such as Logz.io, Splunk, the Screaming Frog Log File Analyser, and many more. DeepCrawl recently released an update to its crawler that lets you import log file data into an existing crawl report. Since we already use DeepCrawl to analyze our clients’ websites, this new feature was a no-brainer.

DeepCrawl doesn’t accept a raw log file, so this led us back to the Screaming Frog Log File Analyser. With it, we were able to export the log data to a clean CSV file and upload that into a DeepCrawl report. Now we can see the data in a more manageable and friendly way.
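If you want to see roughly what that export step involves, this sketch counts bot hits per URL and writes them out as CSV. It takes already-parsed log records (dicts with `url` and `agent` keys), and both the `BOT_TOKENS` list and the `url,bot_hits` column names are hypothetical; check DeepCrawl’s documentation for the columns its log file import actually expects.

```python
import csv
import io
from collections import Counter

# Hypothetical user-agent tokens treated as search engine bots; extend as needed.
BOT_TOKENS = ("Googlebot", "bingbot")

def bot_hits_csv(records):
    """Count bot hits per URL and return CSV text with a url,bot_hits header."""
    hits = Counter(
        r["url"] for r in records
        if any(token in r["agent"] for token in BOT_TOKENS)
    )
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["url", "bot_hits"])
    # Most-hit URLs first; ties keep the order they first appeared in the log.
    for url, count in hits.most_common():
        writer.writerow([url, count])
    return buf.getvalue()
```

Requests from regular browsers are dropped before counting, so the CSV only reflects crawler activity.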

Note: We recommend at least one, and ideally two, full months of log data to get a good picture of your bot hits for technical SEO analysis.
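A quick way to confirm an export covers enough history is to measure the span between its earliest and latest timestamps. This is a minimal sketch; the 30-day default for `min_days` is an assumption based on the one-month minimum above, and it does not detect gaps (for example, a missing week) inside that span.

```python
from datetime import datetime, timedelta

def coverage_days(timestamps):
    """Number of days between the earliest and latest log entries."""
    if not timestamps:
        return 0.0
    return (max(timestamps) - min(timestamps)) / timedelta(days=1)

def enough_history(timestamps, min_days=30):
    """True if the log data spans at least min_days days."""
    return coverage_days(timestamps) >= min_days
```

For example, logs running from January 1 to February 15 span 45 days: enough for the one-month minimum, but short of two full months.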

So Much Data, What To Do With It?

Now that we have the data in DeepCrawl, how do we make it useful? It’s really a case-by-case process, but we do have some suggested next steps.

Bot hit data reported in DeepCrawl:

- No Bot Hits

- Low Bot Hits

- Medium Bot Hits

- High Bot Hits

- Pages With Desktop Bot Hits

- Pages With Mobile Bot Hits
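The exact low/medium/high boundaries DeepCrawl uses aren’t covered in this post, so the thresholds in this sketch (`LOW_MAX`, `MEDIUM_MAX`) are hypothetical. It shows the general idea: count Googlebot hits per crawled URL, split them into desktop and mobile using the fact that the Googlebot Smartphone user agent contains “Mobile” while the desktop one doesn’t, and put each page into a none/low/medium/high bucket.

```python
from collections import Counter

# Hypothetical bucket boundaries (total bot hits over the log period).
LOW_MAX, MEDIUM_MAX = 5, 50

def bucket(hits):
    """Map a hit count to a none/low/medium/high label."""
    if hits == 0:
        return "none"
    if hits <= LOW_MAX:
        return "low"
    if hits <= MEDIUM_MAX:
        return "medium"
    return "high"

def classify_pages(crawled_urls, bot_records):
    """Bucket each crawled URL and split its Googlebot hits by device.

    bot_records: iterable of (url, user_agent) pairs already filtered to Googlebot.
    """
    desktop, mobile = Counter(), Counter()
    for url, agent in bot_records:
        # Googlebot Smartphone's user agent contains "Mobile"; desktop's does not.
        (mobile if "Mobile" in agent else desktop)[url] += 1
    return {
        url: {
            "bucket": bucket(desktop[url] + mobile[url]),
            "desktop_hits": desktop[url],
            "mobile_hits": mobile[url],
        }
        for url in crawled_urls
    }
```

Starting from the crawled URLs rather than the log URLs is what lets pages with no bot hits at all show up in the results.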

Issues reported in DeepCrawl:

- Error Pages With Bot Hits

- Non-Indexable Pages With Bot Hits

- Disallowed Pages With Bot Hits

- Disallowed Pages With Bot Hits (Uncrawled)

- Pages Without Bot Hits In Sitemaps

- Indexable Pages Without Bot Hits

- Desktop Pages With Low Desktop Bot Hits

- Mobile Alternates With Low Mobile Bot Hits
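Most of these issue reports come down to joining two data sets: what the crawler found for each URL (status code, indexability) and how many bot hits the logs recorded for it. The sketch below assumes both have already been loaded into dictionaries keyed by URL path; the input shapes and issue names are hypothetical, and for simplicity each URL lands in at most one issue list.

```python
def find_issues(crawl, hits):
    """Cross-reference crawl data with bot hit counts.

    crawl: url -> {"status": int, "indexable": bool} (from a crawler export).
    hits:  url -> bot hit count (from the log data).
    """
    issues = {
        "error_with_hits": [],
        "non_indexable_with_hits": [],
        "indexable_without_hits": [],
    }
    for url, page in sorted(crawl.items()):
        n = hits.get(url, 0)
        if page["status"] >= 400 and n > 0:
            issues["error_with_hits"].append(url)
        elif not page["indexable"] and n > 0:
            issues["non_indexable_with_hits"].append(url)
        elif page["indexable"] and n == 0:
            issues["indexable_without_hits"].append(url)
    return issues
```

Error pages are checked first, so a 404 that bots keep requesting is reported as an error rather than as a non-indexable page.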

We recommend focusing on “Error Pages With Bot Hits” and “Non-Indexable Pages With Bot Hits” first. Error pages with bot hits are straightforward: fix them with redirects, typically 301s to the most relevant live page. Non-indexable pages with bot hits should be blocked in the robots.txt file where possible.
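Once those URLs are identified, the fixes are usually a few lines of server or robots.txt configuration. This sketch generates Apache mod_alias `Redirect 301` lines for error URLs you have mapped to a replacement by hand (the mapping is a hypothetical input), plus a robots.txt group that disallows non-indexable paths. Keep in mind that `Redirect` matches by URL prefix, and that once a path is disallowed, bots can no longer see a noindex tag on those pages.

```python
def redirect_rules(error_urls, replacements):
    """Build Apache mod_alias redirect lines for error URLs.

    replacements: old path -> new path, chosen by hand (hypothetical input).
    URLs without a replacement are returned separately for manual review.
    """
    rules, unresolved = [], []
    for old in error_urls:
        new = replacements.get(old)
        if new:
            rules.append(f"Redirect 301 {old} {new}")
        else:
            unresolved.append(old)
    return rules, unresolved

def robots_block(paths, agent="*"):
    """Build a robots.txt group that disallows the given paths for one user agent."""
    lines = [f"User-agent: {agent}"] + [f"Disallow: {p}" for p in paths]
    return "\n".join(lines) + "\n"
```

Keeping unresolved URLs separate avoids blanket-redirecting every error page to the homepage, which search engines may treat as a soft 404.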

Next, we suggest looking into “Pages Without Bot Hits In Sitemaps” and “Indexable Pages Without Bot Hits”. These two reports can be incomplete if you don’t have enough log data, because a bot may hit a page only once a month or even once every few months.

Without enough data, you may have to wait a few more weeks and download more log data. Pages from the sitemap that consistently get no bot hits should be checked for duplicate or thin content. Handle indexable pages without bot hits the same way. Also, do a quick Google search for the URL to see whether it shows up in the SERPs; if it does, it has been crawled at least once and is being indexed.
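To build your own list of sitemap URLs without bot hits, you can compare the `<loc>` entries in your XML sitemap against per-URL hit counts from the logs. This sketch assumes a plain sitemap (not a sitemap index) using the standard sitemaps.org namespace, and it compares by URL path only, ignoring host and query string.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_paths(xml_text):
    """Return the URL paths listed in a sitemap's <url><loc> elements."""
    root = ET.fromstring(xml_text)
    return [
        urlsplit(loc.text.strip()).path
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
    ]

def sitemap_pages_without_hits(xml_text, hits):
    """hits: URL path -> bot hit count from the log data."""
    return [p for p in sitemap_paths(xml_text) if hits.get(p, 0) == 0]
```

Rerunning this each time you add another month of logs shows which pages stay unvisited and are worth checking for duplicate or thin content.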

What if you find a page that isn’t being hit by bots, and you’ve determined it doesn’t have any of the issues above? Use your robots.txt file to explicitly allow the relevant bots to crawl that URL. Keep in mind that robots.txt can only permit crawling, not force it, so there’s still no guarantee the page will be crawled or indexed.
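You can sanity-check robots.txt rules like this with Python’s standard `urllib.robotparser` module. The paths and groups below are hypothetical. One caution: Python’s parser applies the first matching rule in a group, while Google uses the most specific (longest) match, so the `Allow` line is placed before the broader `Disallow` to behave the same under both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: let Googlebot into one landing page, block the rest of /landing/.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /landing/new-service
Disallow: /landing/

User-agent: *
Disallow: /landing/
"""

def can_crawl(robots_txt, agent, url):
    """Return True if the robots.txt text lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)
```

With these rules, `can_crawl(ROBOTS_TXT, "Googlebot", "/landing/new-service")` is `True`, while the same URL is blocked for other bots and the rest of `/landing/` stays blocked for everyone.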

To Be Continued…

Other reports can support further investigation and SEO needs; as you can tell, log files can uncover a lot. We suggest starting with the areas above and gradually digging deeper into each one.

As we expand our knowledge of log file analysis and technical SEO, we’ll share what we learn with our readers.

If you need help with log file analysis or any other area of technical SEO, please contact us today!